mri_TumorSynth

mri_TumorSynth - Segments healthy brain tissue and tumor in MR scans that contain tumors.

Update November 2025: mri_TumorSynth v1.0 is now available as part of FreeSurfer (v7.4.0 onwards), fully compatible with existing FreeSurfer pipelines.

Update December 2025: Added support for multi-sequence MR inputs (T1, T1CE, T2, FLAIR) – see Usage section for details.

Author/s: Jiaming Wu

E-mail: jiaming.wu.20 [at] ucl.ac.uk

Rather than directly contacting the author, please post your questions on this module to the FreeSurfer mailing list at freesurfer [at] nmr.mgh.harvard.edu

General Description

mri_TumorSynth is a convolutional neural network-based tool designed for integrated segmentation of healthy brain tissue and brain tumors in MR scans containing tumors. It operates out-of-the-box with minimal user input, supporting clinical and research workflows focused on brain tumor analysis.

Key features of mri_TumorSynth:

* Dual-mode segmentation: whole-tumor + healthy tissue (--wholetumor) and fine-grained inner tumor substructures (--innertumor) following BraTS criteria.

* Robust to variable scan quality: works with clinical MR sequences (T1, T1CE, T2, FLAIR, etc.) of different resolutions and contrasts.

* FreeSurfer-compatible: output labels align with FreeSurfer’s anatomical classification for seamless integration with downstream pipelines (e.g., volume analysis, visualization).

* Efficient processing: runs on both GPU (10 s per scan) and CPU (3 minutes per scan) with minimal computational overhead.

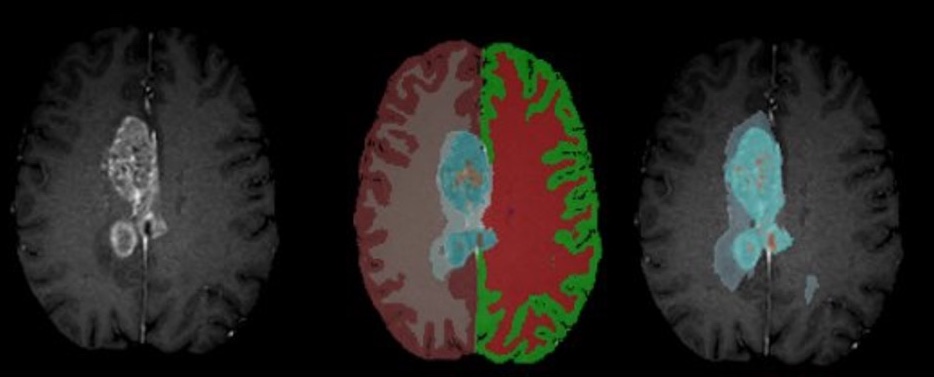

Figure: Example segmentation results – Input T1CE scan (left), healthy tissue + whole tumor mask (middle), inner tumor substructures (right).

New Features (December 2025)

* Multi-sequence input support: now accepts combined T1CE+T2+FLAIR inputs for improved segmentation accuracy (see Usage section).

* Enhanced robustness to low-resolution scans (≤2mm isotropic): internal resampling and augmentation reduce error in clinical datasets.

* Volume output integration: automatically computes and saves volumes of segmented structures (healthy tissue + tumor) when the --vol flag is used (new optional argument).

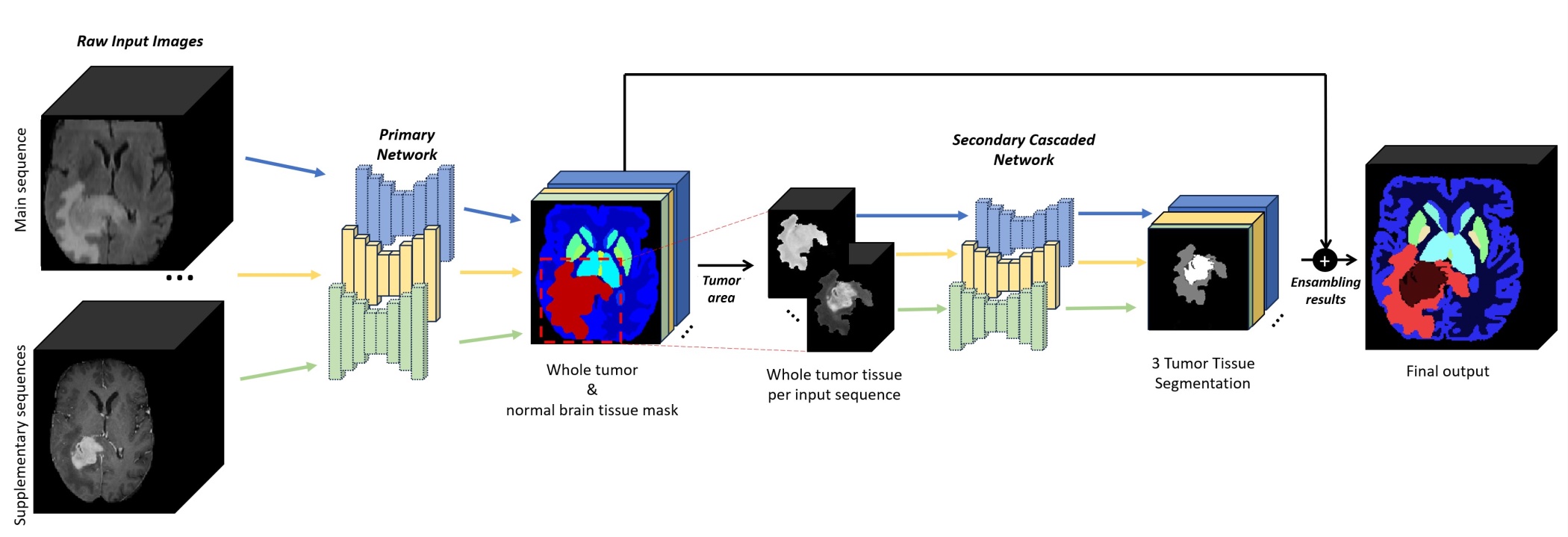

Figure: Cascaded architecture used to produce the final output. For each input image, the first network segments the brain anatomy and potential tumors, while the second network subdivides tumors into 3 classes. Post-processing to refine the tumor structure is executed if multi-modal input is available.

Installation

To run mri_TumorSynth, follow the instructions below to create a virtual Python environment with all the required dependencies and to download the model files required for inference.

Prerequisites

1. Install conda and locate the path to its activation script, which is named 'conda.sh'.

- Instructions for installing conda can be found in the conda documentation: https://conda.io/projects/conda/en/latest/user-guide/install/index.html

- Once conda is installed, you can locate the path to your 'conda.sh' script by running one of the following commands:

# Linux (bash)

cat ~/.bashrc | grep conda.sh

# macOS (zsh, if conda was initialized for zsh)

cat ~/.zshrc | grep conda.sh

2. Download the model file dependencies.

- The model file is available for download from the TumorSynth Weights Download Page (https://liveuclac-my.sharepoint.com/:u:/g/personal/rmapfpr_ucl_ac_uk/EWsIGJOFbD9MiPyQnGhjGHwBquaWhxJfEAzbfs6v5BvFzA?e=JQHlSQ).

- Navigate to the above link and click the 'Download' button in the bottom right of the window.

- Once the zip file containing the model is downloaded, unpack it. This can be done with the 'Archive Utility' on macOS, or with the command below on either Linux or macOS, after navigating to the directory that contains the zip file:

```bash
unzip Task002_StrokesLong.zip
```

- The above command unpacks the zip file and creates a directory named 'Task002_StrokesLong' containing the nnUNet model dependencies. Note the location of this directory, as it must be passed to the installation script.
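Before moving on, it can help to confirm that both prerequisites are in place. The following is a minimal sketch of such a check; the function name `check_prereqs` and its messages are illustrative and are not part of the TumorSynth distribution.

```bash
# Hypothetical helper: verify the two prerequisites described above.
#   $1: path to conda.sh
#   $2: path to the unpacked model directory (e.g. Task002_StrokesLong)
check_prereqs() {
    local conda_sh="$1" model_dir="$2" status=0
    if [ ! -f "$conda_sh" ]; then
        echo "conda.sh not found: $conda_sh" >&2
        status=1
    fi
    if [ ! -d "$model_dir" ]; then
        echo "model directory not found: $model_dir" >&2
        status=1
    fi
    return "$status"
}
```

For a standard conda installation, a call might look like `check_prereqs "$(conda info --base)/etc/profile.d/conda.sh" Downloads/Task002_StrokesLong && echo "prerequisites OK"`.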

Installation script

Once the prerequisites have been met, you can run 'create_nnUNet_v1.7_env.sh' to set up the nnUNet environment. The script will:

* create a conda env with the tested versions of Python and PyTorch

* clone and install the version of nnUNet used to train the model

* create the directory structure that holds the data and model files for nnUNet

* generate a file to source that sets the env vars nnUNet needs to find the data

The following args must be passed to the 'create_nnUNet_v1.7_env.sh' script to correctly configure the nnUNet environment:

* The location of your 'conda.sh' script

* The location of the model file that you have downloaded and unpacked

* The name you want to give the conda env

* The location where you would like to save the trained models and data

Example command:

```bash
# -e: path to the conda.sh script
# -m: path to the unpacked model
# -n: name to give the conda env
# -d: root of the nnUNet data/model tree
# -c: if passed, install CUDA
./create_nnUNet_v1.7_env.sh \
    -e linux/etc/profile.d/conda.sh \
    -m Downloads/Task002_StrokesLong \
    -n nnUNet_v1.7 \
    -d nnUNet_paths \
    -c
```

NOTE: Do not pass the -c flag on a Mac; CUDA is not available on macOS.

When the script finishes running (which could take some time), you will have a new conda environment with the name passed to the -n flag. A file named 'nnUNet_v1.7_path.sh' will also be created in your current working directory; it contains the env vars that must be defined for nnUNet to function properly. Source that file to set the required env vars.

To have the nnUNet env vars set automatically whenever the conda env is activated, place a copy of 'nnUNet_v1.7_path.sh' in the 'activate.d' directory of your conda env. With the newly created conda env active, run the following commands to create the 'activate.d' directory and copy the file there:

```bash
mkdir -p "$CONDA_PREFIX/etc/conda/activate.d"
cp nnUNet_v1.7_path.sh "$CONDA_PREFIX/etc/conda/activate.d"
```
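After sourcing 'nnUNet_v1.7_path.sh' (or re-activating the conda env), you may want to confirm that the variables are actually set. The sketch below assumes the generated file exports the three variables that nnU-Net v1 conventionally reads (`nnUNet_raw_data_base`, `nnUNet_preprocessed`, `RESULTS_FOLDER`). This page does not list the variable names, so check them against the generated file. The function name `check_nnunet_env` is illustrative.

```bash
# Report any nnU-Net v1 variables that are unset or empty.
# The variable names are an assumption based on nnU-Net v1 conventions;
# compare them with the contents of nnUNet_v1.7_path.sh.
check_nnunet_env() {
    local var missing=0
    for var in nnUNet_raw_data_base nnUNet_preprocessed RESULTS_FOLDER; do
        # ${!var:-} expands to the value of the variable named by $var.
        if [ -z "${!var:-}" ]; then
            echo "not set: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

A typical check would be `conda activate nnUNet_v1.7 && check_nnunet_env && echo "nnUNet env OK"`, using whatever env name was passed to -n.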

For older FreeSurfer versions:

* Ensure Python 3.8+ is installed on your system.

* Run pip install tensorflow==2.10.0 (CPU or GPU version; GPU requires CUDA 11.2+ and cuDNN 8.1+).

* Download the TumorSynth module from the FreeSurfer repository:

- TumorSynth module: TumorSynth Download Page

- TumorSynth model weights: TumorSynth Weights Download Page (https://mega.nz/file/Ph4U0JpA#KMO-hybcDdn_0J5KciI1HO_zYbNPsED_xnHhmCdqoBk)

* Extract the module to your FreeSurfer bin directory (e.g., /usr/local/freesurfer/bin).

Note: The tool will automatically detect GPU compatibility on first run. For faster processing, use a GPU with compatible drivers (no additional configuration needed for FreeSurfer v7.4.0+).

Synopsis

mri_TumorSynth --i inputvol [--i2 inputvol2 --i3 inputvol3] --o outputvol [--wholetumor --innertumor --vol volumefile --cpu --threads <threads>]

Arguments

Required Arguments

| Argument | Name | Description |
| --- | --- | --- |
| --i inputvol | Input volume | Path to primary MR scan (required; T1CE recommended for best results). Supports NIfTI (.nii/.nii.gz) and FreeSurfer (.mgz) formats. |
| --o outputvol | Output volume | Path to save segmentation mask (same format as input). |

Optional Arguments

| Argument | Name | Description |
| --- | --- | --- |
| --i2 inputvol2 | Second input volume | Optional: Path to secondary MR sequence (e.g., T2). Use with --i3 for multi-sequence input. |
| --i3 inputvol3 | Third input volume | Optional: Path to tertiary MR sequence (e.g., FLAIR). For use with --i and --i2. |
| --wholetumor | Whole-tumor + healthy tissue mode | Outputs combined mask of healthy brain tissue and whole tumor (includes edema, enhancing tumor, non-enhancing tumor). Input must be skull-stripped and registered to SRI-24 template. |
| --innertumor | Inner tumor substructure mode | Outputs BraTS-compliant subclasses: Tumor Core (TC), Non-Enhancing Tumor (NET), and Edema. Input must be a tumor ROI image (prepare by multiplying raw scan with --wholetumor output mask). |
| --vol volumefile | Volume output file | Path to CSV file for saving volumes of all segmented structures (supports single subject or batch processing). |
| --cpu | Force CPU processing | Bypasses GPU detection and runs on CPU (slower but compatible with systems without GPU). |
| --threads <threads> | Number of CPU threads | Number of cores to use for CPU processing (default: 1; max: number of system cores). |

Usage

mri_TumorSynth supports three primary usage scenarios, with flexibility for single or multi-sequence inputs:

Basic Usage (Single Sequence)

Whole-tumor + healthy tissue segmentation (primary use case):

mri_TumorSynth --i t1ce_skullstripped.mgz --o tumor_whole_healthy_mask.mgz --wholetumor

Inner tumor substructure segmentation (requires tumor ROI input):

mri_TumorSynth --i t1ce_tumor_roi.nii.gz --o tumor_inner_substructures.nii.gz --innertumor

Multi-Sequence Usage (Improved Accuracy)

Combine T1CE, T2, and FLAIR for better segmentation of heterogeneous tumors:

mri_TumorSynth --i t1ce.nii.gz --i2 t2.nii.gz --i3 flair.nii.gz --o tumor_multi_seq_mask.mgz --wholetumor --vol tumor_volumes.csv

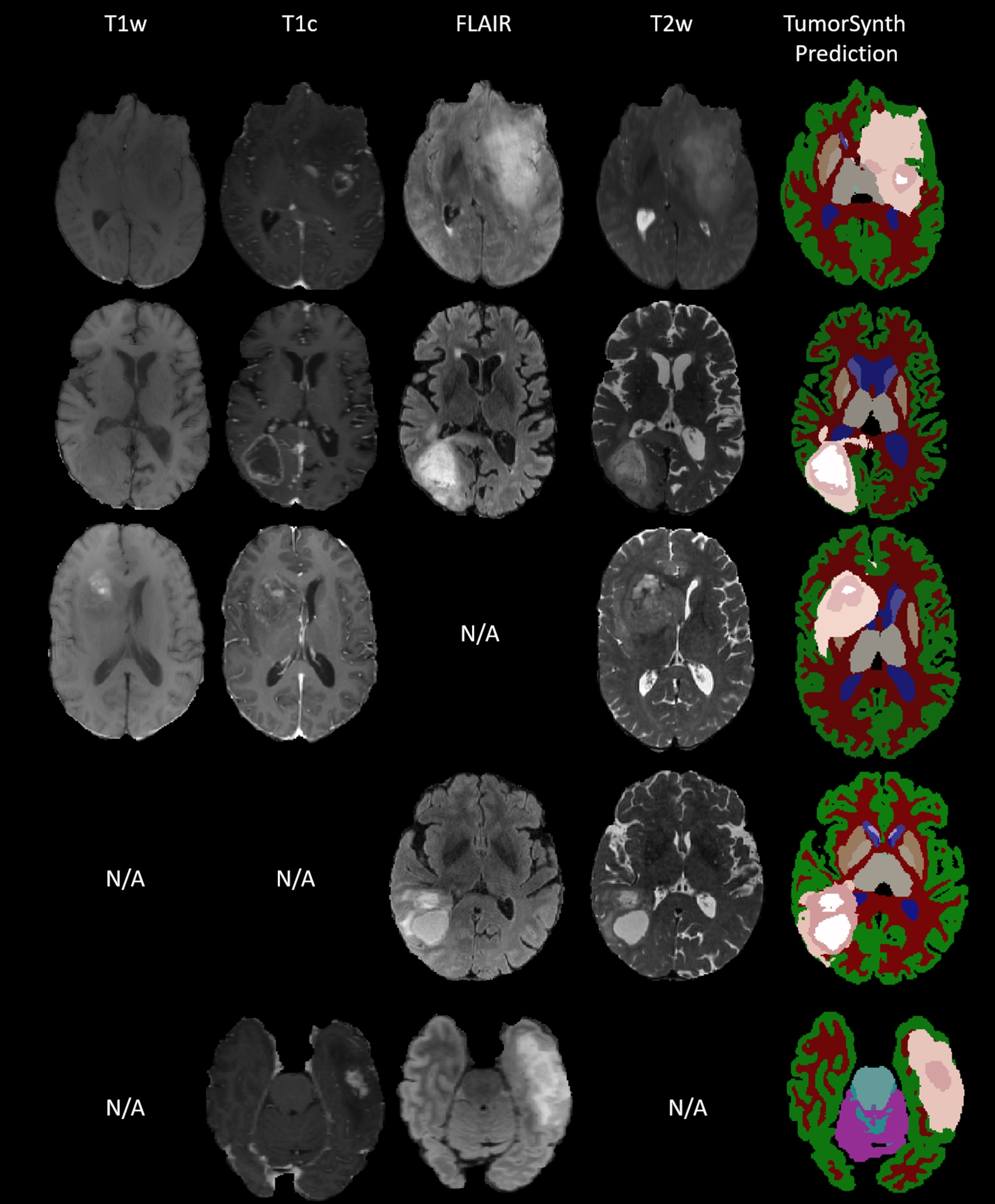

Figure: TumorSynth’s performance on example cases. Post-processing to refine the tumor structure is executed if multi-modal input is available. N/A indicates that the modality was not available at inference time.
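When not every subject has all three sequences, the multi-sequence command can be assembled conditionally. The sketch below is one possible wrapper, with an illustrative function name (`build_tumorsynth_cmd`). It prints the command rather than running it, and it only adds --i3 when --i2 is present, because this page does not state whether --i3 may be used on its own.

```bash
# Print an mri_TumorSynth --wholetumor command, adding --i2/--i3 only
# for secondary sequences that exist on disk.
#   $1: primary scan, $2: output mask, $3/$4: optional extra sequences
build_tumorsynth_cmd() {
    local primary="$1" out="$2" second="${3:-}" third="${4:-}"
    local cmd=(mri_TumorSynth --i "$primary")
    if [ -n "$second" ] && [ -f "$second" ]; then
        cmd+=(--i2 "$second")
        # Assumption: --i3 is only passed together with --i2.
        if [ -n "$third" ] && [ -f "$third" ]; then
            cmd+=(--i3 "$third")
        fi
    fi
    cmd+=(--o "$out" --wholetumor)
    # Print for inspection; paths containing spaces are not quoted here.
    printf '%s\n' "${cmd[*]}"
}
```

For example, `build_tumorsynth_cmd t1ce.nii.gz mask.nii.gz t2.nii.gz flair.nii.gz` prints the full three-sequence command when all files exist, and falls back to the single-sequence form otherwise. To execute instead of print, the `printf` line could be replaced with `"${cmd[@]}"`.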

Batch Processing

To process multiple scans, use a text file with input paths (one per line) and specify a corresponding output text file:

mri_TumorSynth --i input_scans.txt --o output_masks.txt --wholetumor --vol batch_volumes.csv --threads 8

Input text file (input_scans.txt) format: each line is a path to a skull-stripped, SRI-24-registered scan.

Output text file (output_masks.txt) format: each line is the path to save the corresponding segmentation mask.
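The two list files can be generated rather than written by hand. The following sketch assumes all preprocessed scans sit in one directory as .nii.gz files; the function name `make_batch_lists` and the `_mask` output suffix are illustrative choices, not requirements of the tool.

```bash
# Write input_scans.txt and output_masks.txt (in the current directory)
# for every .nii.gz file found in a directory of preprocessed scans.
#   $1: directory of skull-stripped, SRI-24-registered scans
#   $2: directory where the masks should be saved
make_batch_lists() {
    local in_dir="$1" out_dir="$2" scan base
    : > input_scans.txt      # create or truncate both list files
    : > output_masks.txt
    for scan in "$in_dir"/*.nii.gz; do
        [ -e "$scan" ] || continue   # skip the literal pattern if nothing matches
        base="$(basename "$scan" .nii.gz)"
        echo "$scan" >> input_scans.txt
        echo "$out_dir/${base}_mask.nii.gz" >> output_masks.txt
    done
}
```

After `make_batch_lists scans masks`, line N of output_masks.txt corresponds to line N of input_scans.txt, which is the pairing the batch command above expects.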

Examples

Example 1: Whole-Tumor + Healthy Tissue Segmentation (Single Sequence)

mri_TumorSynth --i t1ce_skullstripped_SRI24.nii.gz --o t1ce_whole_tumor_healthy_mask.nii.gz --wholetumor

Input: Skull-stripped T1CE scan registered to SRI-24 template.

Output: Mask containing healthy brain tissue (e.g., white matter, gray matter, CSF) and whole tumor (edema + enhancing + non-enhancing tumor).

Example 2: Inner Tumor Substructure Segmentation

First, prepare the tumor ROI input (using --wholetumor output):

fslmaths t1ce_raw.nii.gz -mul t1ce_whole_tumor_healthy_mask.nii.gz t1ce_tumor_roi.nii.gz

Then run inner tumor segmentation:

mri_TumorSynth --i t1ce_tumor_roi.nii.gz --o t1ce_inner_tumor_substructures.nii.gz --innertumor

Output: BraTS-compliant labels for Tumor Core (TC), Non-Enhancing Tumor (NET), and Edema.
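If the --wholetumor output is a multi-label image rather than a binary tumor mask, multiplying the scan by it directly would scale intensities by label value and keep healthy tissue. One possible alternative, sketched below, is to extract the tumor label as a 0/1 mask first. This uses FSL's `fslmaths` options `-thr`, `-uthr`, `-bin`, and `-mul`. The tumor label value is a placeholder that must be taken from the label table, and the function name `make_tumor_roi` is illustrative.

```bash
# Build a tumor ROI from a multi-label --wholetumor mask.
#   $1: raw scan            $2: --wholetumor output
#   $3: tumor label value (placeholder; take it from the label table)
#   $4: output ROI image (.nii.gz)
# Set DRY_RUN=1 to print the fslmaths commands instead of running them.
make_tumor_roi() {
    local scan="$1" labels="$2" tumor_label="$3" roi="$4"
    local bin_mask="${roi%.nii.gz}_binmask.nii.gz"
    local run=""
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi
    # Keep only voxels equal to the tumor label, then binarize to 0/1.
    $run fslmaths "$labels" -thr "$tumor_label" -uthr "$tumor_label" -bin "$bin_mask" || return 1
    # Zero out everything outside the tumor in the scan.
    $run fslmaths "$scan" -mul "$bin_mask" "$roi"
}
```

Running `DRY_RUN=1 make_tumor_roi t1ce_raw.nii.gz t1ce_whole_tumor_healthy_mask.nii.gz "$TUMOR_LABEL" t1ce_tumor_roi.nii.gz` first lets you inspect the two commands before anything is written.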

Example 3: Multi-Sequence Segmentation with Volume Output

mri_TumorSynth --i t1ce.nii.gz --i2 t2.nii.gz --i3 flair.nii.gz --o tumor_multi_seq_mask.mgz --wholetumor --vol tumor_volumes.csv --threads 4

Outputs: Segmentation mask + CSV file (tumor_volumes.csv) with volumes of all healthy tissues and whole tumor.

List of Segmented Structures

Segmentation labels follow FreeSurfer’s anatomical classification for healthy tissue and BraTS criteria for tumor substructures.

Table: Label values and corresponding structures for --wholetumor (left) and --innertumor (right) modes.

Frequently Asked Questions (FAQ)

Do I need to preprocess input scans?

For --wholetumor mode: Inputs must be skull-stripped and registered to the SRI-24 template (FreeSurfer’s recon-all or mri_robust_register can be used). No additional preprocessing (e.g., bias field correction, intensity normalization) is required.

For --innertumor mode: Inputs must be tumor ROI images (prepared by masking the raw scan with --wholetumor output).

What MR sequences are supported?

T1CE is the recommended primary sequence (--i). T2 and FLAIR can be added as secondary/tertiary inputs (--i2, --i3) for improved accuracy. The tool auto-detects sequence type and fuses information for segmentation.

Why is my segmentation misaligned with the input scan?

Misalignment occurs if the input is not registered to the SRI-24 template (required for --wholetumor mode). Re-register the input using mri_robust_register and re-run the tool. For non-FreeSurfer viewers, save the registered input with --resample (compatible with FreeSurfer v7.4.1+).

How can I speed up processing?

* Use a GPU (default if compatible; 5-10x faster than CPU).

* For CPU processing, increase the --threads value (e.g., --threads 8 for an 8-core machine).

* Avoid multi-sequence input if speed is the priority (single T1CE is fastest).
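For CPU runs, the thread count can be derived from the machine instead of being hard-coded. The sketch below uses `getconf _NPROCESSORS_ONLN`, which is available on Linux and macOS, and falls back to 1 if the value cannot be read. The function name `detect_cores` is illustrative.

```bash
# Print the number of online CPU cores, falling back to 1 if unknown.
detect_cores() {
    local n
    n="$(getconf _NPROCESSORS_ONLN 2>/dev/null)" || n=""
    case "$n" in
        ''|*[!0-9]*) n=1 ;;   # empty or non-numeric: use the tool's default of 1
    esac
    echo "$n"
}
```

The result can then be passed on the command line, for example `mri_TumorSynth --i t1ce_skullstripped.mgz --o mask.mgz --wholetumor --cpu --threads "$(detect_cores)"`.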

What file formats are supported?

Inputs and outputs: NIfTI (.nii/.nii.gz) and FreeSurfer (.mgz) formats. Volume outputs: CSV (.csv).

See Also

mri_synthseg, recon-all, mri_robust_register, mri_volstats

References

SynthSeg: Segmentation of brain MRI scans of any contrast and resolution without retraining. B Billot, et al. Medical Image Analysis, 83, 102789 (2023).

BraTS 2021 Challenge: Brain Tumor Segmentation Challenge.

Reporting Bugs

Report bugs to analysis-bugs@nmr.mgh.harvard.edu with the following information:

* FreeSurfer version (run freesurfer --version).

* Exact command used to run mri_TumorSynth.

* Error message (copy-paste full output).

* Input file details (sequence type, format, resolution).